- 05 Jan, 2023

- read

- Majid Fatemian

The older I get, the more I accept my flaws, and I have many. One is the inability to estimate how long a project would take. I estimate it would take three months, which could easily take six months when the actual implementation starts.

Although this sounds terrible, I’m sure I’m not alone. Well, probably not as far off as I am. This is because we don’t know what we don’t know.

The Unknown Unknowns

When we start a project, a new functionality within an existing system, or a migration, we do a proof of concept. Everything looks great at the beginning. But as we progress in the implementation with more details, we face some unknowns that we never considered. Not everything goes according to the plan or to what documentations say it should.

This is when the challenges start to creep up one after the other, to the point that we have to decide: Pivot or Persevere? Should we push through the challenges and get over them, change directions or make a U-turn?

In this article, I share my learning from facing this decision a few times and share what we as a team used to help us to make the best decision.

Case Studies

I will provide two examples to elaborate on the key learnings. Here is a brief description of them:

1 - Migration of a Data Lake Processing

We had an existing big data pipeline written in APache Spark/Scala for the amount of traffic the company was dealing with back in 2016. With the rapid growth speed of Red Ventures and after a few very successful acquisitions, the big data platform was no longer efficient in handling the new amount of traffic, the rate of growth, and the required end-to-end latency.

The ask was:

- Scalability - Increase the ability to scale to accommodate large fluctuations in traffic

- Reduced Latency - The existing process took about 45 minutes to prepare the data in the lake. It needed to be there in under 10 minutes.

- Facilitate the operations - The process and the code had grown so much that it was difficult to maintain and operate.

So we decided to take processing out of Apache Spark jobs and migrate them to Serverless Architectures.

2 - Migration of an Identities graph

Red Ventures helps people discover and decide. Therefore the Customer Data Platform is at the heart of all the technologies. We used to have the identities graph stored and operated off of a non-relational database, having the graph model and traversal algorithms in the application layer. But as the name implies, an identity graph consists of a graph. So we started investigating how it would look to store the identity graph data in a graph database. The aim was to delegate the complex graph traversal algorithms to the database engine. It could help us have a more straightforward application code and simpler compliance enforcement. Graph databases technology was new to the team, and we had a lot to learn, as it was a different paradigm of modelling and persisting the data.

Now that we have a quick introduction to the case studies, let’s dive into what we learned and the most important guides in the cases we need to decide between pivoting or persevering.

1. The FEEDBACK loop

In the book, The LEAN STARTUP, Eric Ries says:

The fundamental activity of a startup is to turn ideas into products, measure how customers respond, and then learn whether to pivot or persevere. All successful startup processes should be geared to accelerate that feedback loop.

In my opinion, a new project, functionality or migration of systems is not much different. The shorter the feedback loop, the faster the team can decide whether to pivot or persevere.

Trying to make the experience as close to a real-world example as possible. That’s why contrary to popular belief, I encourage to:

Go into the production environment as early as possible.

The sooner we start dealing with real-world scenarios, the sooner we catch the hidden challenges. In the case of the two case studies I introduced at the beginning, they went smoothly till we started dealing with the production volume of data. Granted, we launched in production in a shadowed mode, not impacting the current expectation and behaviour of the system. But if we had dealt with the production data sooner, it would have been easier to catch the case.

Migration of the data lake

Only after we started to process the production data we realized that we were generating an ocean of small files due to the significant decrease in end-to-end latency. It was terrible because it made data usage impossible for downstream business users. Previously they had to fetch maybe hundreds of files intra-day. But now they had to deal with thousands of tiny files. Reading these small files was so significant that it affected the query performances in orders of magnitude. Yes, the data was available much faster, but what happened afterwards regarding the data processing was significantly impacted.

Migration of the Identity Graph

When we started with the new Graph Database in production, things were blazingly fast as we had started with a blank slate. Only after we migrated our existing customer’s historical data did we see a significant impact on the system’s performance. Some customer identity graphs had become big, and now we could see the effect on traversal. Hence the impact on the performance of the system.

2. ZOOM OUT

The technical challenges are sometimes very blinding. We see one challenge and try to come up with one solution. As soon as we find the solution, it may cause another challenge and gets us busy solving the new one. Again and again. It pushes us down a rabbit hole to the point that we don’t notice the divergence from the primary goal.

In our case of migrating the Identity Graph to a Graph database, we couldn’t leverage our existing tech stack. Most of our tech stack is in Go. But the chosen graph database engine didn’t have an official Go toolset, and the unofficial ones were having problems that we had to roll them out for our case.

Hence we used Serverless AWS Lambda functions to read from the main Kafka stream, process and push to the Graph database engine.

After that, we faced the inability of Lambda trigger for Kafka to scale more than the number of partitions in the stream. To overcome that, we delayed the processing to another step. We created a Lambda function that reads from the Kafka writes to an SQS queue and fan-out there so the Lambda function can read off that queue. SQS and Lambda scale much better together.

At the time, we were not seeing what we were creating. We were adding a lot more architectural complexity to our system, and our goal was to simplify our codebase.

If we had zoomed out at each step and verified where we were versus what is the goal we were trying to achieve, it would have been a lot easier to see the problem we were creating.

That’s why technical challenges are sometimes blinding. We get hit by one, try to solve it, then the next one and so on—death by a thousand cuts. Results contradict the goals.

3. Ask for HELP!

In difficult situations like this, you are not alone. You don’t need to make the decision all by yourself. It’s a team effort. Even if you’re a team of one, you have resources.

Search for experts within the organization. Other experts might have gone through a similar path, and their perspectives could help and facilitate the decision-making.

If you have vendors for services like Cloud computing, they usually offer help and consultation. Their insight is significant and very valuable as they have exposure to many customers.

Finally, the community is usually very supportive. You can reach out to the experts and get their feedback.

4. COMMUNICATION

As with most other situations in life, our challenges are mostly communication problems. The same thing applies to engineering and software engineering. I believe that:

Software Engineering is more about COMMUNICATION than Coding

In situations where we feel stuck, communicating it early on with the leadership and product team will help them to be aware of the problem. So later on, when more challenging decisions need to be made, nobody is surprised. Also, the process of decision-making becomes collaborative.

Technical Leadership, Product and Project management all add different perspectives to the situation that will help to ease that decision.

5. PRODUCT! The ultimate guide

All of the efforts are related to the product in some way or another. It’s either enhancing its capabilities and performance, adding functionalities or using a better service or technology. So the product itself is the best guideline to look up to—the northern star.

Are the efforts:

- Adding business values?

- Reducing the technical debts?

- Are we aligned with what the customers are asking?

- Are the changes within the required or desired Service Leve Agreements?

So we did the same. We put what’s the most important for our product as a guide. Then started evaluating the situation based on that.

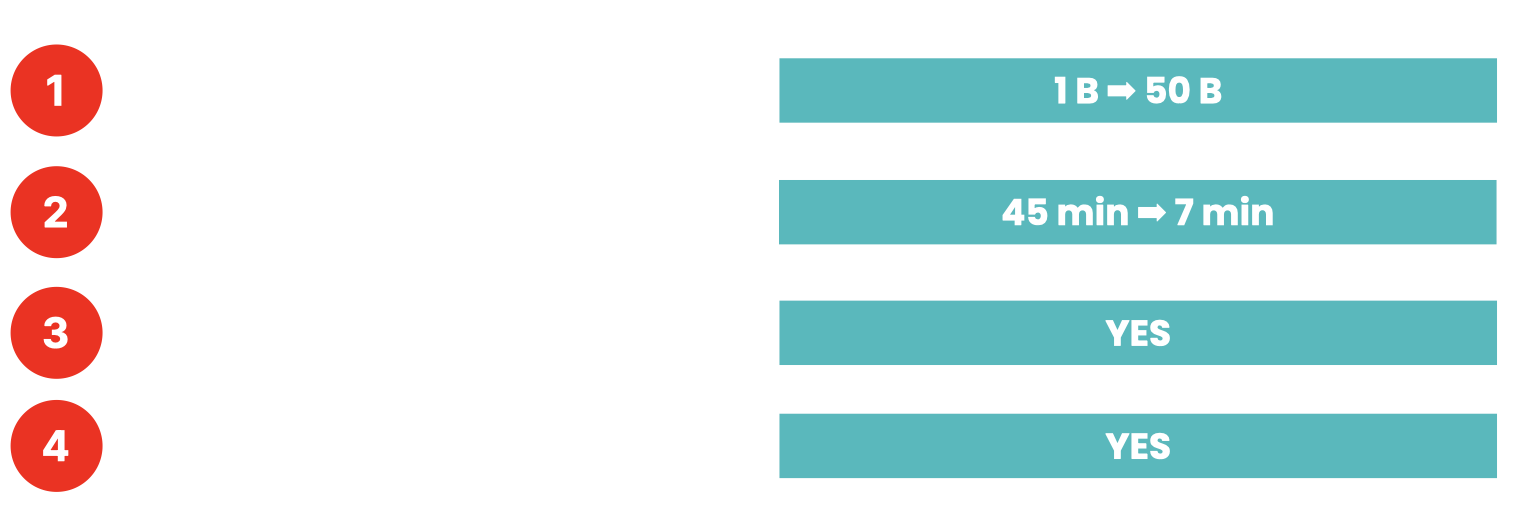

In use case #1, migration of the data lake, we started asking ourselves:

- Are we improving the scalability? Yes! We were able to enhance the scalability by at least 50X.

- Are we reducing the latency? Yes! We have reduced the latency from 45 minutes to less than 7.

- Are we making Operations and Maintenance easier? Yes! The Serverless approach would make things much easier to maintain and operate.

- Is the new product a drop-in replacement for the existing one? Yes! There is zero impact on downstream consumers. No change was needed.

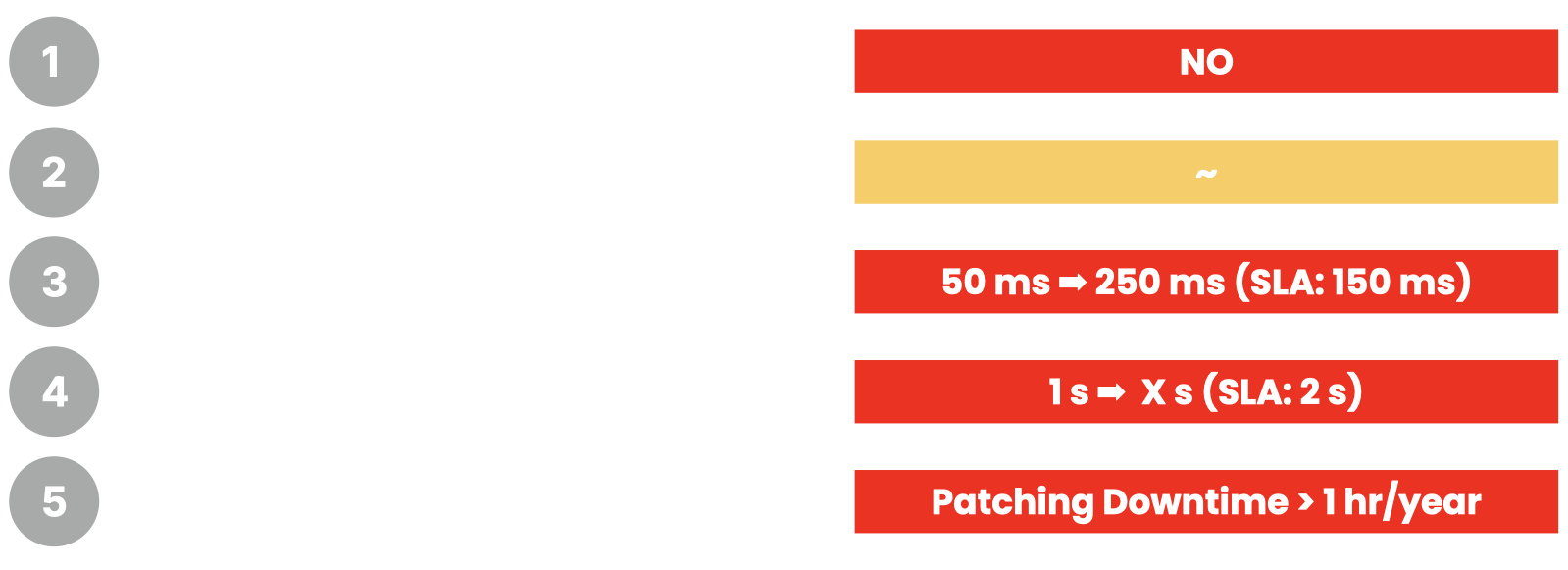

For use case #2, migration of the identity graph to a graph database, we once again put the product as the ultimate goal and started evaluating the situation:

- Are we simplifying the code base? Yes! But we had added so much architectural complexity. We traded one for the other. The architectural complexity was far more than the code simplicity we brought.

- Are we within our profile evaluation SLA of under 150 milliseconds? No! But we used to be. For larger customer graphs, traversing the whole graph would take much longer.

- Are we within our data processing SLA of under 2 seconds? No! Again, we used to be and lost it due to all the complexity we added to our architecture.

- Are we within our availability/resiliency tier, which requires us to have %99.95 availability? No! Again we used to be. Although the graph database engine we chose was a managed solution, it still had a downtime of patching and upgrading for about 30 minutes per month, automatically disqualifying us from the required resiliency tier.

6. Either way is SUCCESS

We should not consider pivot as failure. When we are too involved in a technical challenge sometimes fall into the trap of cost fallacy. We have spent so much time and energy on the topic that it seems like a waste to pivot. We have invested so much in it. But we need to consider that it is a success as soon as we stop the waste and the bleeding.

It's never too late to mend

That documentation will help us remember what was made at the decision and why. We tend to forget the reasoning behind our choices. And that would cause falling into a circle. We might come back a few months later and try something we have already tried and tested. Therefore the documentation of each decision we make is essential.

In conclusion, situations, when we need to decide on Pivot or Persevere are inevitable. But with the approaches above, we can bring this moment early on in a project and be equipped to make that an easy decision.

You can also find the executive summary of this article posted on Red Ventures' Inspired blog, here.